AI, ChatGPT, and LLMs have become household terms, and since ChatGPT was released to the public in November 2022 there has been an astronomical rise in interest in AI. Many may see this as an overnight success, but AI, or at least its development, has been around since the 1950s.

And there have been steady advancements in the AI field since then. I never really paid attention to AI in the earlier parts of my career because I mostly saw it as an academic endeavour, and I was primarily focused on pure software engineering and the business and people aspects of it. Like a lot of people, I have changed my views on AI recently, mainly because AI has become mainstream. But what made AI mainstream? And what do developers need to know to get started with AI development?

The Revolutionary Impact of ‘Attention Is All You Need’

In 2017, researchers at Google released a paper titled “Attention Is All You Need”. If your research on AI has gone any deeper than “what is ChatGPT”, you have almost certainly come across references to this paper. In short, the paper introduced the Transformer. This is a neural network architecture built around the attention mechanism, and it can be trained far more efficiently in parallel than the recurrent models that came before it. A neural network is a machine learning model, loosely inspired by the human brain, that learns patterns from data and uses them to make predictions or decisions.
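The Transformer is built around an operation called scaled dot-product attention, softmax(QKᵀ / √d_k)V. Each token's query is compared with every token's key. The resulting scores are turned into weights, and the output is a weighted mix of the value vectors.

Below is a minimal pure-Python sketch of this for a single attention head, using tiny hand-written matrices and only the standard library. Real implementations use tensor libraries and add multiple heads, masking, and learned projections, none of which are shown here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for a single attention head."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                      # (n_queries x n_keys)
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]            # each row sums to 1
    return matmul(weights, v)                             # weighted mix of value vectors


if __name__ == "__main__":
    # Three tokens, each with a 2-dimensional query, key, and value vector.
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    for row in scaled_dot_product_attention(q, k, v):
        print([round(x, 3) for x in row])
```

When every key scores equally against a query, the weights are uniform and the output is simply the average of the value vectors. That makes this a handy way to sanity-check the code.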

OpenAI, founded in 2015, built on the Transformer architecture to create and release several versions of GPT, which stands for Generative Pre-trained Transformer. It was when OpenAI put a chat interface (ChatGPT) on top of its GPT models that it triggered the worldwide interest in AI that we see today.

Did You Know?

Dzmitry Bahdanau invented content-based neural attention that is at the core of deep-learning-based natural language processing. In other words, Bahdanau’s invention and work is the foundation of the seminal Attention Is All You Need paper.

https://rizar.github.io

So What Is An LLM?

LLM stands for “Master of Laws”. It is an advanced postgraduate degree in law that is… Sorry about that slip of context (hallucination). LLM, in the context of AI, stands for Large Language Model. An LLM is a type of artificial intelligence model that excels in understanding and generating human-like text based on vast amounts of training data. The recent popularity of AI has been largely driven by LLMs running behind chat interfaces.

LLMs are transformer models trained on very large datasets. Data scientists and machine learning engineers wrangle the data and set up the infrastructure required to train them.

How Are LLMs Developed?

Training an LLM involves several steps. It generally starts with preparing the data that will be used to train it (and, of course, setting up the compute infrastructure). The data must be cleaned and transformed to make sure it is good enough to use. Remember that vast amounts of data are required to train an LLM successfully. In fact, it is becoming evident that smaller models can perform at the level of larger ones when they are trained for longer on high-quality data. Think long-trained models, a point Andrej Karpathy has made in a thread on X.
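A cleaning step can start as simply as the pass below. This is a minimal sketch with illustrative choices; the `clean_corpus` name and the five-word minimum are assumptions. Production pipelines for LLM training typically go much further, for example with near-duplicate detection and quality filtering.

```python
import hashlib
import re
from typing import Iterable, List


def clean_corpus(documents: Iterable[str], min_words: int = 5) -> List[str]:
    """Normalise whitespace, drop very short documents, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"\s+", " ", doc).strip()        # collapse runs of whitespace
        if len(text.split()) < min_words:              # too short to be useful
            continue
        # Hash the lower-cased text so duplicates differing only in case are caught.
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        cleaned.append(text)
    return cleaned
```

The first occurrence of each document is kept in its original casing, so the output stays readable while duplicates are dropped.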

Once the data is prepared, the LLM is configured. This can involve choosing the number of layers, the number of attention heads, and other hyperparameters. Training can then begin. This is rarely a small task: because of the large amounts of data involved, a lot of infrastructure is needed, and it has to be set up and configured to suit the training run.
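The configuration step can be pictured as filling in a structure like the one below. This is a minimal sketch, not any framework's API. The class name and default hyperparameters are illustrative assumptions. The parameter estimate uses the common 12·d² per-layer approximation for a decoder-only transformer with learned position embeddings:

- 4·d² for the attention projections;
- 8·d² for a feed-forward block that expands to 4·d.

```python
from dataclasses import dataclass


@dataclass
class TransformerConfig:
    """Hypothetical configuration for a decoder-only transformer LLM."""
    n_layers: int                 # number of stacked transformer blocks
    d_model: int                  # width of the hidden (embedding) vectors
    n_heads: int                  # attention heads per layer
    vocab_size: int               # number of distinct tokens
    context_length: int           # maximum sequence length
    learning_rate: float = 3e-4   # example training hyperparameter
    batch_size: int = 512         # example training hyperparameter

    def __post_init__(self) -> None:
        # Each head works on an equal slice of the hidden vector.
        if self.d_model % self.n_heads != 0:
            raise ValueError("d_model must be divisible by n_heads")

    @property
    def head_dim(self) -> int:
        return self.d_model // self.n_heads

    def approx_parameters(self) -> int:
        """Rough parameter count, ignoring biases and layer-norm weights."""
        per_layer = 12 * self.d_model ** 2       # 4*d^2 attention + 8*d^2 feed-forward
        token_embeddings = self.vocab_size * self.d_model
        position_embeddings = self.context_length * self.d_model
        return self.n_layers * per_layer + token_embeddings + position_embeddings


if __name__ == "__main__":
    # GPT-2-small-like sizes, used purely as an example.
    cfg = TransformerConfig(n_layers=12, d_model=768, n_heads=12,
                            vocab_size=50257, context_length=1024)
    print(cfg.head_dim, cfg.approx_parameters())
```

With these GPT-2-small-like settings, `approx_parameters()` gives 124,318,464. That is close to the roughly 124M parameters commonly quoted for that model, and it shows how layer count and width drive model size.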

After training, the model may be fine-tuned. Its performance can then be evaluated by having it process test datasets. It is also common to evaluate these models on language fluency, coherence, and perplexity. LLMs may also be tested with sets of questions covering common-sense inference, multitasking, and factuality.
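Perplexity, one of the metrics mentioned above, has a compact definition. It is the exponential of the average negative log-probability that the model assigns to each actual next token in held-out text. Lower is better.

Here is a minimal sketch that assumes you already have those per-token natural-log probabilities from the model. How you obtain them depends on the framework and is not shown.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from the natural-log probabilities of each actual next token.

    perplexity = exp(-(1/N) * sum(log p_i)). A model that always gave the
    correct token probability 1 would score 1.0.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


if __name__ == "__main__":
    # Hypothetical probabilities a model gave to the correct next token at each step.
    probs = [0.5, 0.25, 0.125]
    print(round(perplexity([math.log(p) for p in probs]), 6))
```

A useful intuition: a model that spreads its probability uniformly over 10 choices at every step has a perplexity of 10. It is as "confused" as if it were picking among 10 equally likely tokens.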

When the training and evaluation of an LLM are deemed complete, it is deployed for use. Deploying and operating an LLM requires a lot of infrastructure, specifically Graphics Processing Units (GPUs), along with large amounts of memory to hold the model. LLMs range from very large parameter counts to comparatively small ones, and generally the smaller the model, the less infrastructure is needed to run it. Some models are small enough to run locally on high-end personal computers.
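A rough rule of thumb shows why model size drives infrastructure: the weights alone need about (number of parameters) × (bytes per parameter) of memory. The sketch below assumes that rule and the listed byte sizes per numeric precision. It deliberately ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
# Assumed bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str = "fp16") -> float:
    """Approximate memory (GB, where 1 GB = 10**9 bytes) to hold the weights alone."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for size in (7e9, 70e9):
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(size, precision)
            print(f"{size / 1e9:.0f}B params @ {precision}: {gb:.1f} GB")
```

By this estimate, a 7B-parameter model needs about 14 GB for 16-bit weights but about 3.5 GB when quantised to 4 bits. This helps explain why smaller, quantised models can run on a well-equipped personal computer.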

Deployment is usually not the last step, as the model's performance needs to be monitored continuously to ensure it is operating within expected parameters.

Roles In The AI Industry

The AI industry has developed over many years, and numerous roles have evolved with it. Some of these roles are:

- Data Scientist: Works with complex data sets to extract insights and build predictive models using machine learning algorithms.

- Machine Learning Engineer: Develops and deploys machine learning models into production systems, optimising them for scalability and performance.

- AI Research Scientist: Conducts theoretical and applied research to advance the field of artificial intelligence, often working on cutting-edge algorithms and techniques.

- Data Engineer: Builds and manages the infrastructure for collecting, storing, and processing large volumes of data used in AI projects.

- AI Architect: Designs the overall architecture of AI systems, selecting appropriate technologies and components to meet performance, scalability, and reliability requirements.

- Data Analyst: Examines data sets to uncover trends, patterns, and insights that inform decision-making and strategy.

- Domain Expert: Provides subject matter expertise in specific industries or domains, guiding AI development efforts to address domain-specific challenges and opportunities.

- AI Trainer/Annotator: Labels and annotates data sets used to train machine learning models, ensuring high-quality training data for accurate model performance.

The Software Developer’s Role

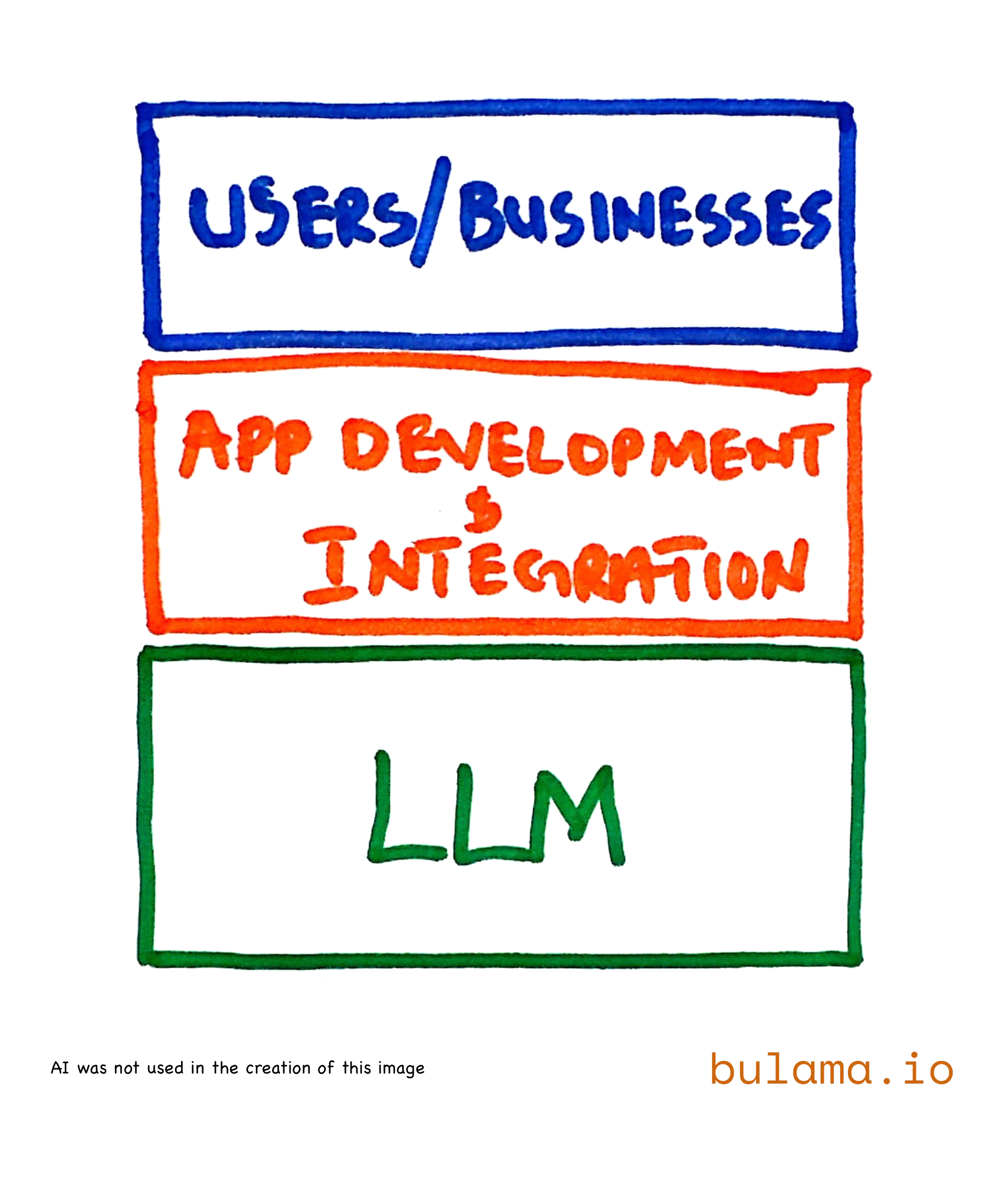

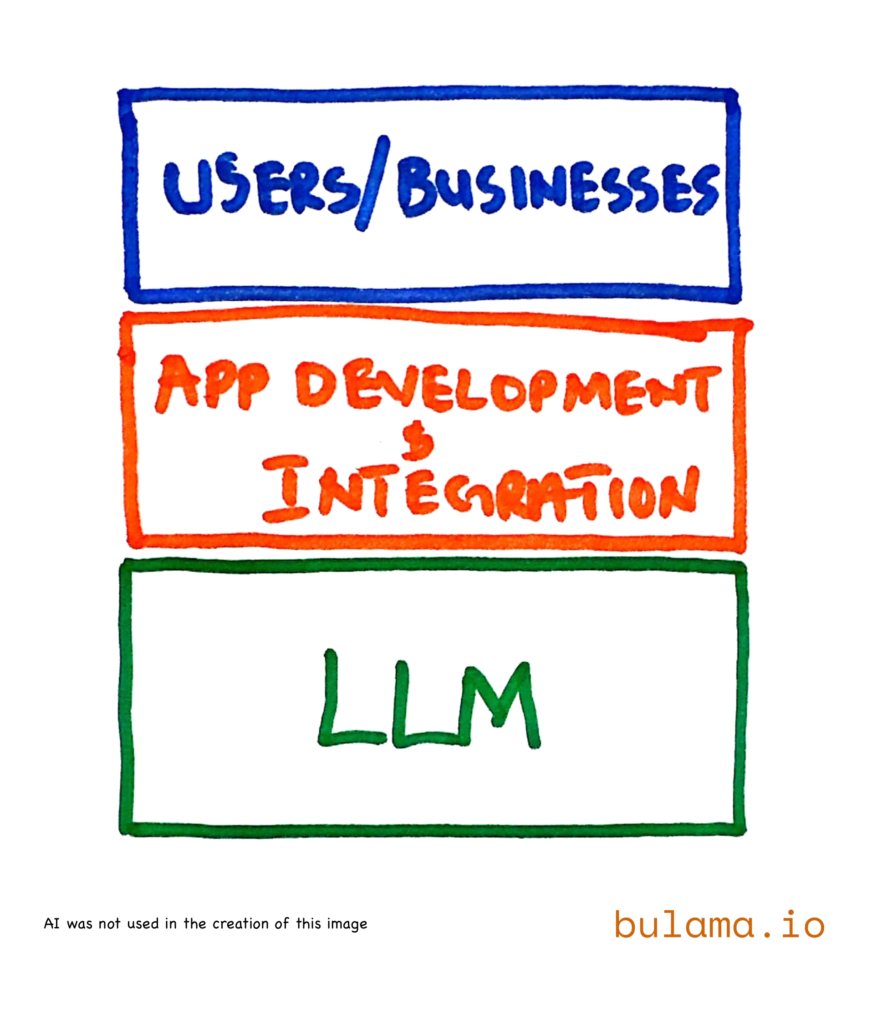

Software developers build things, and as a software developer you have already been doing this. Building with AI is very similar to building with anything else: you need to understand what it is, how it works, and how to integrate with it. Deployed LLMs usually expose APIs that you can connect to and integrate into your system.
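As an illustration, many hosted LLMs accept a JSON request in the OpenAI chat-completions shape over HTTPS. The sketch below uses only Python's standard library. The endpoint, the model name, and the `OPENAI_API_KEY` environment variable are assumptions to swap for your provider's values. Production code would also add retries and error handling.

```python
import json
import os
import urllib.request

# Assumption: an OpenAI-compatible chat completions endpoint.
API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-4o-mini"  # hypothetical choice; use a model your provider offers


def build_request(question: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP request asking the model a single question."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(question: str) -> str:
    """Send the request and return the text of the model's first reply."""
    req = build_request(question, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With `OPENAI_API_KEY` set, `ask("Summarise what a transformer model is in one sentence.")` returns the reply text. Keeping `build_request` separate from `ask` means the payload can be inspected and tested without network access.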

With the rise in popularity of AI, more and more businesses are looking to introduce AI into their operations. To do this, a business needs to identify what benefits it wants from AI and which parts of the business it wants to integrate it into. Once these questions are answered, the next step is usually to build and integrate the AI-powered system.

To build AI into their systems, developers need to understand concepts such as:

- LLMs: Already defined above. Developers need to understand that some models are fine-tuned for specific domains and applications. For example, some are tuned for chat (GPT-3.5 Turbo) and some for medical applications (Med-PaLM 2). Others focus on analysing and understanding business data. And of course there are LLMs that excel at programming and coding. Both proprietary and open-source models are available.

- Prompting: The main means of communicating with a model. Instructions are passed to a model via prompts. A prompt can tell the model what its role is and state the question being asked. It can also provide additional contextual data to ground the model's responses and include any chat history (especially in programmatic prompting). The quality of the prompts used to interact with a model affects the quality of the responses it generates.

- Retrieval Augmented Generation (RAG): A way of building AI applications in which data the LLM was not trained on is used to ground the responses it generates. An LLM may not know about some data, or certain data may need to be referenced directly when generating a response. In either case, that data has to be supplied as context when interacting with the LLM. A typical RAG application retrieves the pieces of data most relevant to a user's question and includes them in the prompt sent to the model.
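Prompting and RAG, as described in the list above, fit together in code roughly as follows. This is a minimal sketch. A real RAG application would typically use an embedding model and a vector store for retrieval; here a bag-of-words cosine similarity stands in for that step so the example runs with the standard library alone. The message list follows the common role/content chat format. The function names and system instructions are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> Counter:
    """Lower-case bag of words; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_messages(question: str, documents: List[str],
                   history: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Assemble a chat prompt: role instructions, retrieved context, history, question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    system = (
        "You are a helpful support assistant. Answer using only the context below; "
        "say you don't know if the answer is not there.\n\nContext:\n" + context
    )
    return [{"role": "system", "content": system}, *history,
            {"role": "user", "content": question}]
```

The resulting list can be sent as the `messages` of a chat request. The system message carries the role and the grounding data, the history preserves the conversation, and the user message carries the question.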

AI Development Tools

The AI community has developed a number of software tools, libraries, and websites that are used for:

- Training models

- Cleaning data

- Building ETL pipelines

- Sharing knowledge

- Sharing and ranking models

- Writing AI software and tools

There are also some programming and scripting languages that have become very popular in the AI space. I will talk about some of the tools, libraries, and languages below:

- TensorFlow: TensorFlow, developed by Google Brain, is an open-source machine learning framework. It provides a wide range of tools and libraries for building and deploying machine learning models, particularly neural networks. It is used both for research experimentation and for production deployment.

- PyTorch: PyTorch, developed at Facebook’s AI Research lab (FAIR), is an open-source machine learning library. Its dynamic computation graph gives developers a lot of flexibility when building complex neural networks. It is widely used for deep learning and NLP research and development.

- Keras: Keras is a high-level neural networks API in Python. It originally ran on top of TensorFlow, Theano, or Microsoft Cognitive Toolkit, while Keras 3 supports TensorFlow, JAX, and PyTorch as backends. Its user-friendly interface makes it quick to experiment with deep neural networks, and it supports both convolutional and recurrent networks.

- NumPy: NumPy is a foundational package in Python’s scientific computing ecosystem. It provides multi-dimensional arrays and matrices along with a large set of mathematical functions that operate on them. These numerical computations underpin many machine learning algorithms, which makes NumPy an essential tool for developers getting into machine learning.

- Jupyter Notebooks: Jupyter Notebook is an open-source web application that provides an interactive environment combining live code, equations, visualisations, and narrative text. It is popular with data scientists and researchers for prototyping, experimentation, and documentation.

- Apache Spark: Apache Spark is a unified analytics engine for distributed data processing. Its APIs, available in multiple languages, support diverse workloads including batch processing, streaming, and machine learning. Its fault tolerance and in-memory processing make it well suited to large-scale data processing.

- Microsoft Azure Machine Learning: Azure Machine Learning is a cloud-based platform for developing, training, and deploying machine learning models. It offers a comprehensive suite of tools and services for experimentation and deployment, and it integrates with frameworks such as TensorFlow and PyTorch.

- IBM Watson Studio: IBM Watson Studio is an integrated environment that supports collaboration among data scientists, developers, and domain experts. It provides tools for data preparation, model development, and deployment, along with capabilities for automating workflows and experimenting with advanced techniques.

- Hugging Face: Hugging Face describes itself on its website as follows: “The Hugging Face Hub is a platform with over 350k models, 75k datasets, and 150k demo apps (Spaces), all open source and publicly available, in an online platform where people can easily collaborate and build ML together. The Hub works as a central place where anyone can explore, experiment, collaborate, and build technology with Machine Learning.”

- LangChain: LangChain is a framework for building LLM-based applications. It encapsulates common concepts and approaches used when working with AI models and is very popular in the Python ecosystem.

- Ollama: Ollama is a tool that lets developers run and interact with LLMs locally. You can run LLMs using Ollama on your laptop or private server.
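With Ollama running, models are served over a local REST API. The sketch below calls its `/api/generate` endpoint using only Python's standard library. It assumes Ollama is listening on its default address (`http://localhost:11434`) and that the example model `llama3` has already been pulled, for instance with `ollama pull llama3`. Streaming is switched off so the whole answer arrives as one JSON object.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming request to a locally running Ollama server."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text from the JSON "response" field."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=120) as resp:
        return json.load(resp)["response"]
```

With the server running, `generate("llama3", "Why is the sky blue?")` would return the model's answer as a string. Because everything stays on your machine, no API key is involved.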

- Google Vertex AI: Vertex AI is a managed machine learning platform on Google Cloud. It lets you train and deploy machine learning models as well as customise large language models.

Python is predominantly used in the machine learning, data, and AI ecosystems. JavaScript is also popular, with support for many of the common AI libraries. Believe it or not, Java also has substantial support for developing AI applications, especially through the Spring AI framework.

Summary

This article takes a software developer’s perspective on the journey of artificial intelligence (AI) from its beginnings to its current mainstream status. Reflecting on the rise in interest sparked by the release of ChatGPT in 2022, I note that AI has been around since the 1950s despite its recent surge in popularity.

I highlight the pivotal role of transformer models, particularly Large Language Models (LLMs, not related to law), in driving this surge, and walk through the process of LLM development. From data preparation and model configuration to training, evaluation, and deployment, I emphasise the need for vast datasets and robust infrastructure.

I also outline the diverse roles within the AI industry, from data scientists to software developers, each contributing to AI advancement in their own way. Finally, I introduce prominent AI development tools, libraries, and platforms such as TensorFlow, PyTorch, Hugging Face, and LangChain, which are essential for collaborative model building and application development.

- Intellectual Teams is recruiting developers into its talent pool. If you are a developer and interested in getting opportunities to work full-time remotely then you can apply to join the talent pool here.

- At Intellectual Apps we are building an AI-powered product named Breef Docs. Breef Docs allows companies and individuals to build personalised knowledge-bases and interact with them in a conversational manner. Join the Breef Docs waitlist